Over the years, deepfakes have become a menace rather than just an editing tool for entertainment. During the 2016 U.S. presidential election, deepfakes (videos altered or manipulated using artificial intelligence) were circulated on social media to spread fake news. To address the growing concern, Microsoft is set to launch two new tools ahead of the U.S. elections in November 2020 that can be used to verify whether a video has been altered.

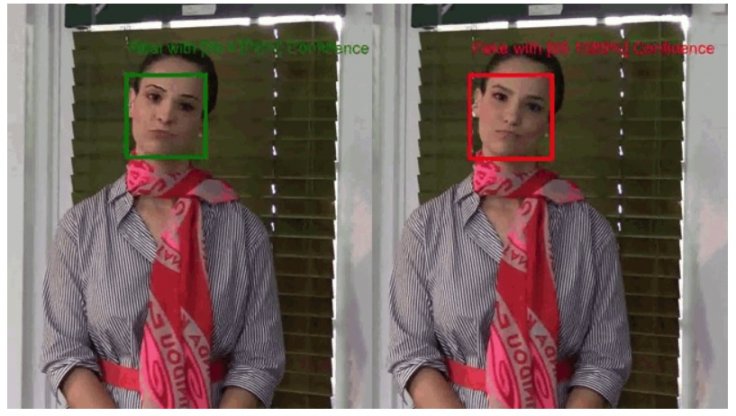

The first tool, Microsoft Video Authenticator (MVA), is for general users, who can use it to analyze a photo or video and see whether it has been artificially manipulated. For photos, MVA gives a confidence score, or percentage chance, that the media has been manipulated; for videos, it provides the same score in real time for every frame.

The second tool is aimed at businesses and will be offered through Microsoft's cloud service, Azure. It will allow creators to add digital certificates of authenticity to videos and images. As the content circulates online, the certificate stays in its metadata, and a browser extension can be used to find out who originally made the content and whether it was altered later.
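As a rough illustration of the idea (not Microsoft's actual implementation, whose details the company has not published here), a certificate of this kind can be thought of as a signed fingerprint of the content. The sketch below uses only Python's standard library. The names `make_certificate`, `verify` and `CREATOR_KEY` are hypothetical, and a shared-secret HMAC stands in for the certificate-based signature a real system would use.

```python
import hashlib
import hmac

# Hypothetical signing key; a real system would use a certificate's private key.
CREATOR_KEY = b"demo-key-not-for-production"


def make_certificate(content: bytes, creator: str, key: bytes = CREATOR_KEY) -> dict:
    """Build a small metadata record: creator, content hash, and a signature."""
    digest = hashlib.sha256(content).hexdigest()
    signature = hmac.new(key, f"{creator}:{digest}".encode(), hashlib.sha256).hexdigest()
    return {"creator": creator, "sha256": digest, "signature": signature}


def verify(content: bytes, cert: dict, key: bytes = CREATOR_KEY) -> bool:
    """Return True only if the certificate is genuine and the content is unchanged."""
    expected = hmac.new(
        key, f"{cert['creator']}:{cert['sha256']}".encode(), hashlib.sha256
    ).hexdigest()
    if not hmac.compare_digest(expected, cert["signature"]):
        return False  # the certificate itself was forged or tampered with
    # The stored hash must match a fresh hash of the content being checked.
    return hashlib.sha256(content).hexdigest() == cert["sha256"]


original = b"original video bytes"
cert = make_certificate(original, "Example Newsroom")
print(verify(original, cert))            # True: content is unchanged
print(verify(original + b"edit", cert))  # False: content was altered
```

In this sketch, changing even one byte of the content changes its hash, so the check fails; changing the creator name in the record invalidates the signature instead.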

Video Authenticator looks for minute details and imperfections in videos and images by "detecting the blending boundary of the deepfake and subtle fading or greyscale elements" that human eyes can miss. Microsoft developed the tool using data from FaceForensics++ and tested it on the Deepfake Detection Challenge dataset.

"In addition to fighting disinformation, it helps to protect voting through ElectionGuard and helps secure campaigns and others involved in the democratic process through AccountGuard, Microsoft 365 for Campaigns and Election Security Advisors. It's also part of a broader focus on protecting and promoting journalism," Microsoft said in a blog post.

Project Origin

Microsoft has also partnered with Project Origin, an international consortium of media companies that includes the BBC, The New York Times, CBC, AFP, Reuters and The Wall Street Journal, among others. The coalition's members will alert one another to misinformation regarding the coronavirus, the U.S. elections and other important events through the Trusted News Initiative.

The coalition will attach a digital watermark to original content that degrades if the content is manipulated, and it will also test Microsoft's tool. "In the months ahead, we hope to broaden work in this area to even more technology companies, news publishers and social media companies," Microsoft said.

Challenges Ahead

However, deepfake technology grows more sophisticated every day as artificial intelligence advances. Microsoft acknowledges that its tool will struggle to authenticate every piece of content in the days ahead. The tech giant hopes the tool can keep pace with the technology and stop the spread of misinformation.

"The fact that they're generated by AI that can continue to learn makes it inevitable that they will beat conventional detection technology. However, in the short run, such as the upcoming U.S. election, advanced detection technologies can be a useful tool to help discerning users identify deepfakes," Microsoft said.

Recently, Facebook and Twitter have started flagging content that is misleading or contains disinformation. Facebook has also banned deepfake media, while Twitter flags such content by labeling it as "manipulated and synthetic media". Reddit has also announced a ban on such content.