Automation is a trend that looks set to stay. From smart homes to factories, robots have taken over many jobs once done by humans. Instead of mopping and cleaning the floors yourself, you can leave the work to a robot vacuum cleaner, and it will do the job well enough. But according to a new study, that same robot helper can be manipulated to spy on you.

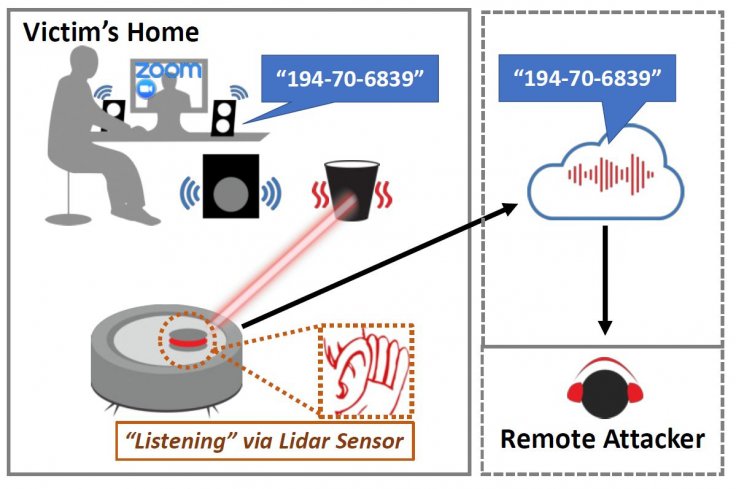

Robot vacuum cleaners use LiDAR (light detection and ranging) sensors to navigate and map the floor plan. But those sensors could be repurposed to listen to conversations. In an attack called LidarPhone, attackers could turn the sensor into a laser microphone, according to researchers from the National University of Singapore (NUS) and the University of Maryland in the U.S.

"The proliferation of smart devices including smart speakers and smart security cameras has increased the avenues for hackers to snoop on our private moments. Our method shows it is now possible to gather sensitive data just by using something as innocuous as a household robot vacuum cleaner," said Sriram Sami, an NUS student, who worked on the project.

How Does It Work?

Using a laser to eavesdrop isn't new. The technique was used by intelligence agents during the Cold War to spy on each other. By pointing a laser at a window, agents could measure how the glass vibrated as people spoke inside. The vibrations were then decoded to reconstruct the conversation. For the experiment, researchers used Xiaomi's Roborock vacuum cleaners.

As the robot cleaner maps the floor by pointing its laser at nearby objects such as dustbins, desks, speakers, or even takeaway bags, sound in the room makes those objects vibrate slightly. From the reflected laser signal, the researchers were able to recover the original sound. They found that glossy polypropylene bags worked best for recovering sound, while glossy cardboard worked worst.

Sami said that by applying signal processing and deep learning algorithms, the team could recover audio data. That audio could at times contain sensitive information such as credit card numbers. The team managed to get 90-percent accurate readings of the audio data.
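To give a feel for the signal-processing half of that idea, here is a minimal Python sketch. It is not the researchers' pipeline: the sampling rate, the simulated readings, and the function names are all assumptions made for illustration, and no deep learning is involved. It fakes noisy reflected-laser readings from a surface vibrating at a single tone, removes the steady reflection level, and then looks for the strongest frequency in the remaining signal.

```python
import math
import random

SAMPLE_RATE_HZ = 2000  # hypothetical sampling rate, not a figure from the study


def simulate_reflection(tone_hz, seconds, seed=0):
    """Fake reflected-intensity readings: steady level + tiny vibration + noise."""
    rng = random.Random(seed)
    n = int(SAMPLE_RATE_HZ * seconds)
    return [
        10.0
        + 0.2 * math.sin(2 * math.pi * tone_hz * i / SAMPLE_RATE_HZ)
        + rng.gauss(0.0, 0.1)
        for i in range(n)
    ]


def remove_dc(samples):
    """Subtract the mean so that only the fluctuating part of the signal remains."""
    mean = sum(samples) / len(samples)
    return [s - mean for s in samples]


def dominant_frequency(samples, lo_hz=50.0, hi_hz=900.0):
    """Return the strongest frequency between lo_hz and hi_hz using a plain DFT."""
    n = len(samples)
    best_freq, best_power = 0.0, -1.0
    for k in range(1, n // 2):
        freq = k * SAMPLE_RATE_HZ / n
        if not lo_hz <= freq <= hi_hz:
            continue
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq


readings = simulate_reflection(tone_hz=440, seconds=0.5)
print(dominant_frequency(remove_dc(readings)))
```

With the simulated 440 Hz tone, the script should report a frequency close to 440 Hz even though the vibration is tiny compared with the steady reflection. Recovering intelligible speech from real sensor data is a far harder problem, which is where the deep learning step described by the researchers comes in.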

Conditions Apply

While the LidarPhone attack is practical, it works only if certain conditions are met. Most importantly, the attacker must first modify the robot cleaner's firmware with malware. Only then can the LiDAR sensor be repurposed. The malware also solves another problem. Because the LiDAR sensor rotates during normal operation, it collects fewer data points from any single object, which makes eavesdropping difficult. The malware can stop the rotation and keep the laser pointed at the object that best picks up sound vibrations in the room, reported ZDNet.
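Some rough arithmetic shows why rotation matters. The Python sketch below uses made-up numbers (a 2,000-samples-per-second sensor and a target spanning 18 degrees of the sweep) and assumes readings are spread evenly around a full circle. None of these figures come from the study.

```python
def samples_on_target_per_second(samples_per_second, target_width_deg, spinning):
    """Estimate how many readings per second land on one target object.

    Assumes readings are spread evenly over a 360-degree sweep while spinning.
    All inputs are hypothetical illustration values.
    """
    if not spinning:
        # A stationary sensor aimed at the target spends every reading on it.
        return float(samples_per_second)
    return samples_per_second * target_width_deg / 360.0


print(samples_on_target_per_second(2000, 18, spinning=True))
print(samples_on_target_per_second(2000, 18, spinning=False))
```

Under these assumptions, a spinning sensor spends only one-twentieth of its readings on the target, while a stopped sensor spends all of them on it.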

The researchers added that the malware must also contain the address of a remote server to which it uploads the laser data. Because robot cleaners do not have surveillance-grade laser microphones, the data then needs to be processed to get the best sound quality.

With all those problems solved and conditions met, a robot cleaner could become a surveillance tool, but for now the attack is purely academic. And with Android, Windows and Linux malware already spying on people through hacked smart devices, phones and computers, hacking a robot cleaner may not be worth an attacker's effort.

Nevertheless, the proof-of-concept attack will help companies that develop such products to strengthen security measures and prevent such threats. "Our work demonstrates the urgent need to find practical solutions to prevent such malicious attacks," Sami said.

"In the long term, we should consider whether our desire to have increasingly 'smart' homes is worth the potential privacy implications. We might have to accept that each new Internet-connected sensing device brought into our homes poses an additional risk to our privacy, and make our choices carefully," said NUS' Assistant Professor Jun Han, who led the research project.